Issue #32: Reviewing 2023 and Not-So-Bold 2024 Predictions

Plus: Make a Massive Impact on the Bottom Line with Analytics, Understanding Data Lakehouses, Idempotence Explained, Spatiotemporal Data Analysis, Composable Data Systems and New Snowflake Git Feature

Hi all, happy new year*! In this newsletter, we have:

Reviewing 2023 and Not-So-Bold 2024 Predictions

How Analytics Can Make a Massive Impact on the Bottom Line

Understanding Parquet, Iceberg and Data Lakehouses

Four pitfalls of spatiotemporal data analysis and how to avoid them

Data Explained: Idempotence

Is it Time for Composable Data Systems?

DevOps in Snowflake: How Git and Database Change Management enable a file-based object lifecycle

*To those that follow the Gregorian calendar

Reviewing 2023 and Not-So-Bold 2024 Predictions

It’s that time of the year when loads of experts make bold predictions that 2024 is going to be the year of “X“ (and often X is also conveniently the exact thing the expert is selling to you).

Joe Reis makes an excellent argument about not bothering with any predictions, but new year predictions also give us a chance to reflect on the general state of data and what trends have been appearing. After all, 2024 is just a number, so expect many of the trends of 2023 to keep going into the new year.

So the list below is more of a “list of what is currently happening and will continue to happen in data, according to me“:

Generative AI had a big breakout in 2023, though I can see a lot of firms ending up in the “trough of disillusionment” in 2024 without a sensible data and AI strategy.

Relatedly, the phrase “Intelligent Data Platform“ has been thrown around a lot recently, though if I were being cynical, this is yet to mean any major differences to platform architecture beyond a few AI features being integrated into existing Data Platforms components. I’ll likely write a full article on this later.

Last year didn’t have too much breakthrough innovation in data outside of generative AI to me. Some people might see this as a good thing: hopefully data companies are trying to make their existing products and features better rather than trying to reinvent the wheel every financial quarter.

Related to the above, there has been a strong trend in the last year or two to figure out how to get a good Return on Investment (ROI) from a Data Platform rather than just building one and hoping it makes everything better.

Will there be a new game-changing innovation in data in the next year? I don’t know; it’s like guessing when a black swan event will occur.

Data Mesh hype seems to have died down a bit, though the idea of Data Products is still a popular concept. I think the Data Mesh architecture has a lot of merit but only makes sense for high-maturity, highly distributed organisations that are very data-driven.

Cloud Data Warehouses and Lakehouses companies are still battling it out, though neither technology has significantly more mindshare than the other, in my opinion. They are also increasingly taking each other’s features, so the lines between them are becoming increasingly blurry.

Real-time streaming has been slowly growing but struggles to fully replace batch processing in most pipelines. Also, streaming-only products will have to find a way to compete with the “good enough” streaming offered by Databricks and Snowflake.

Data Modelling will keep making a sort of comeback after being somewhat sidelined by One Big Table models and query-driven analytics.

Data Governance will keep getting more important as more controls are needed to keep Generative AI usage in check. Though separate data catalogues are still too expensive for most firms. What we are already seeing, on the other hand, is data catalogue features being added to data processing engines, like Starburst Gravity or Unity Catalog.

In DevOps / Platform, Terraform is still king, though it may have a serious challenge in OpenTofu. Platform Engineering is gaining more traction as a replacement for DevOps, though I expect progress to be slow in the next year as I feels like it’s only needed for the biggest and most mature cloud platforms.

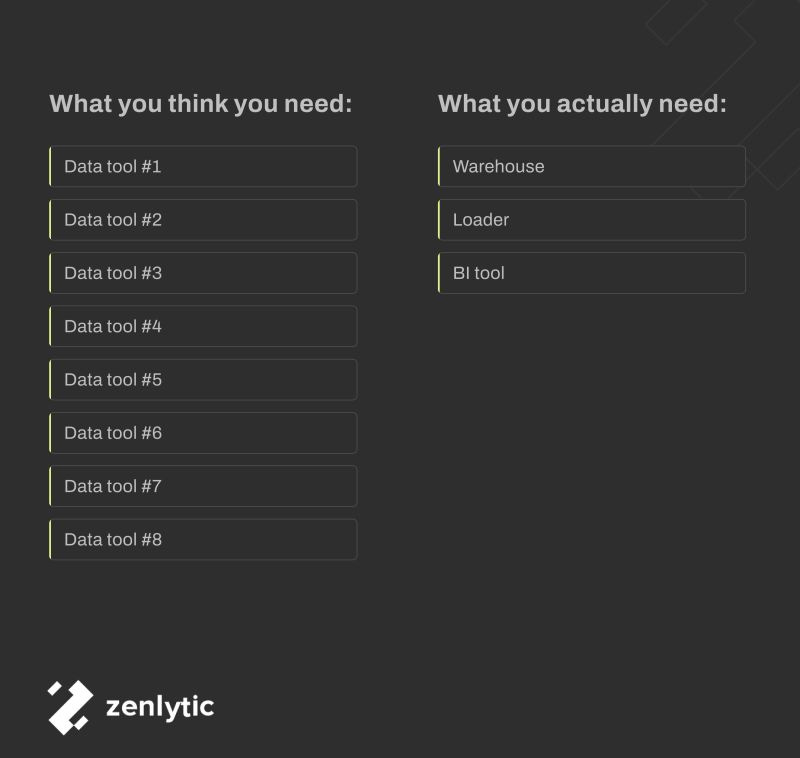

Data quality is still very important, but like Data Catalogs, buying separate data quality products sometimes feels like a step too far for a lot of organisations, as they want to keep their data stack as simple and/or cheap as possible. Most seem happy with using free libraries or custom code where they can.

So not too many wild surprises above if you already follow existing data trends, but that’s the point: most people don’t like to make big gambles when spending on IT.

How Analytics Can Make a Massive Impact on the Bottom Line

Ergest Xheblati, Solutions Architect at Wave40, shows how finding constraints in a process via Data Analytics can have major impacts on a company.

I’d also like to note that this article reminds me how important it is to capture your whole business process where possible in data; otherwise, it’s tricky to see how actions taken upstream affect downstream data, processes, and outputs.

Understanding Parquet, Iceberg and Data Lakehouses

Those coming from a Databases and/or Data Warehouse background can struggle with the concept of Data Lakehouses, and David Gomes, Director of Engineering at SingleStore, admits he was one of those people and wrote this informative article to help their understanding.

I’ve also tried my hand at explaining Lakehouses and how they compare to Warehouses and Databases, if you’re looking for another take on the topic.

Four pitfalls of spatiotemporal data analysis and how to avoid them

Analysing data based on time (temporal / time-series) can be tricky, and analysing data on geospatial data can be even more painful, so if you have to analyse data on both dimensions (spatiotemporal), then you could be in for a whole world of pain.

Gregor Aisch, former founder and CTO of Datawrapper, gives tips on how avoid common issues with spatiotemporal data.

Data Explained: Idempotence

Yes, I’m linking to another post by Matt Palmer, but he’s a great writer, and I think impotence is a) a key ingredient for building robust data pipelines and b) a concept that is not talked about enough in data.

Is it Time for Composable Data Systems?

While the last few years have proven there is a market for Data Lakehouses that has the main benefit of decoupling the storage and compute layers, should we go further and decouple the user interface, compute engine, and storage into three different composable layers?

Jordan Volz, Head of Field Engineering at Voltron Data, makes a case for them.

DevOps in Snowflake: How Git and Database Change Management enable a file-based object lifecycle

Vincent Raudszus, Software Engineer at Snowflake walks us through how to add git version control in Snowflake just using SQL commands, which I’ve never seen before (note it is currently only in private preview).

I see other data-processing products adopting this approach in the near future.

Sponsored by The Oakland Group, a full-service data consultancy. Download our guide or contact us if you want to find out more about how we build data platforms!

Photo by Moritz Knöringer on Unsplash