How to Build a Data Platform: DataOps

What Are DevOps and DataOps? Why Do I Need Them? DataOps Metrics, Developer Environments and Speed vs. Reliability vs. Budget Trade-offs

Why Should I Automate My Data Platform?

First, let’s talk about what aspects of a Data Platform often get automated:

Updates to the Data Platform code: data transformations, pipelines and infrastructure

Schema migrations

Security Scanning (scanning for vulnerabilities in internal and external code libraries and platform configuration)

Code Linting for code consistency and complete documentation

Testing

All of the above can be done manually, but that requires a developer to actively do the work. Automating these tasks can free up more of your developers’ time, though remember that some tasks take more effort to automate than they would ever take to do manually.
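To make that trade-off concrete, here is a minimal break-even sketch. It assumes you can estimate how long the automation takes to build, how long the manual task takes per run and how often the task runs. The function names are hypothetical, and it ignores the ongoing cost of maintaining the automation, so treat the result as a rough guide only.

```python
def break_even_runs(minutes_to_automate: float,
                    manual_minutes_per_run: float,
                    automated_minutes_per_run: float = 0.0) -> float:
    """Runs needed before the time spent automating a task is paid back."""
    # Time saved on each run; automated runs may still need some supervision.
    saving_per_run = manual_minutes_per_run - automated_minutes_per_run
    if saving_per_run <= 0:
        return float("inf")  # automation never pays back
    return minutes_to_automate / saving_per_run


def worth_automating(minutes_to_automate: float,
                     manual_minutes_per_run: float,
                     runs_per_month: float,
                     horizon_months: float,
                     automated_minutes_per_run: float = 0.0) -> bool:
    """True if the task runs often enough within the horizon to pay back."""
    expected_runs = runs_per_month * horizon_months
    return expected_runs >= break_even_runs(
        minutes_to_automate, manual_minutes_per_run, automated_minutes_per_run)


print(break_even_runs(960, 30))              # 32.0
print(worth_automating(960, 30, 8, 12))      # True
print(worth_automating(960, 20, 1 / 3, 12))  # False
```

For example, 16 hours (960 minutes) spent automating a 30-minute deployment that runs eight times a month pays back after 32 runs, well within a year; the same effort spent on a 20-minute quarterly task would need 48 runs, or twelve years.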

But time isn’t the only benefit: traceability is another major one, as manual tasks are hard to audit. For example, it may be difficult to trace who made the change that caused issues with the Data Platform, and when, but with automation every update goes through the same process, so you can log and monitor it.
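As a minimal sketch of that traceability, assuming your automation can run a small Python step after each deployment, every deployment could append a record to an append-only JSON Lines audit log. The file-based log, the function names and the field names are hypothetical; in practice you would more likely rely on your CI/CD tool’s own run history or a central logging service.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def record_deployment(log_path: Path, actor: str, commit_sha: str,
                      environment: str, status: str) -> dict:
    """Append one deployment event to an append-only JSON Lines audit log."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,              # who (or which service account) deployed
        "commit": commit_sha,        # exactly which code version was deployed
        "environment": environment,
        "status": status,
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry


def deployments_between(log_path: Path, environment: str,
                        start: datetime, end: datetime) -> list[dict]:
    """Answer 'what was deployed to this environment, and by whom, in this window?'"""
    results = []
    with log_path.open(encoding="utf-8") as f:
        for line in f:
            entry = json.loads(line)
            ts = datetime.fromisoformat(entry["timestamp"])
            if entry["environment"] == environment and start <= ts <= end:
                results.append(entry)
    return results
```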

Automation can bring a security benefit too: the more you automate, the fewer changes need to be made directly to production, so it may be possible to remove developers’ direct access to the Data Platform, making it more secure by reducing the attack surface.

For any production software system, it is generally recommended to limit access as much as possible: only the automation server has standing access, and developers are given time-limited access in emergencies.
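That policy can be sketched as a simple access check: the automation account always has access to production, and a developer only has access while an emergency grant, with a recorded reason, has not expired. The account name, the two-hour default and the `AccessGrant` type are hypothetical; real enforcement belongs in your cloud provider’s or database’s access management, and this only models the rule.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, Optional

AUTOMATION_ACCOUNT = "ci-deployer"  # hypothetical service account used by the CI/CD server


@dataclass(frozen=True)
class AccessGrant:
    """A hypothetical time-limited ('break-glass') grant for one developer."""
    principal: str
    environment: str
    reason: str
    expires_at: datetime


def grant_emergency_access(principal: str, environment: str, reason: str,
                           duration: timedelta = timedelta(hours=2),
                           now: Optional[datetime] = None) -> AccessGrant:
    """Issue a grant that expires automatically; a reason is required for auditing."""
    now = now or datetime.now(timezone.utc)
    if not reason.strip():
        raise ValueError("emergency access needs a documented reason")
    return AccessGrant(principal, environment, reason, now + duration)


def can_access_production(principal: str, grants: Iterable[AccessGrant],
                          now: Optional[datetime] = None) -> bool:
    """Only the automation account has standing access; everyone else needs a live grant."""
    now = now or datetime.now(timezone.utc)
    if principal == AUTOMATION_ACCOUNT:
        return True
    return any(g.principal == principal and g.environment == "production"
               and now < g.expires_at for g in grants)
```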

What is DataOps and why might I need it?

DataOps is short for Data Operations: automating the operations performed on a Data Platform so that changes are made faster, more securely and more reliably than if the same operations were done manually.

It also promotes an agile culture of shipping early to get customer feedback as soon as possible, and a process of making and tracking changes in small iterations, so you can adapt quickly to business and technology changes while maintaining software quality.

It is based on DevOps (Development and Operations), and to be honest, the differences between DevOps and DataOps are not massive.

We prefer to have data teams implement their own DataOps processes, with the Platform Engineering team providing common services such as user admin, firewalls or logging across the organisation (though in small to medium organisations, data teams may manage the platform as well).

Having a separate team build DataOps processes can create a slower, more waterfall-style process, where the data team has to wait for the DevOps team whenever a change to infrastructure or automation is needed.

DataOps Manifesto

While every organisation will implement DataOps differently, the DataOps Manifesto can be an excellent starting point for most teams.

To me, though, the core of the DataOps philosophy comes down to these five main points:

Always design, build and support while thinking about the customer and business requirements.

Get feedback as often as you can from all stakeholders to continually improve.

Data teams need the power to build, run and test as much of their solutions themselves as possible: waiting for IT, Security, Business Domain etc. will slow them down, reduce innovation and fail to keep up with business and technology changes.

Embrace change, as technology, data and the business will never stop changing.

It’s a team effort: balance sufficient communication with allowing people time to get work done.

Achieving the above can be difficult in certain organizations, especially in highly regulated environments, so adapt as needed, but we think this should be your ultimate aim.

In our experience, the hardest things to change are culture and process: we’ve seen many DataOps and DevOps initiatives fail because they were shoehorned into a Waterfall process.

The other thing to note is that the faster you develop, the happier your developers and data consumers will be:

Developers want to build cool features they can deploy quickly, not be stuck doing monotonous manual tasks.

Data consumers want the platform to be as up to date as possible, so they have the most up-to-date data to make decisions.

Happier employees are more productive, creating a positive feedback loop.

DataOps Metrics

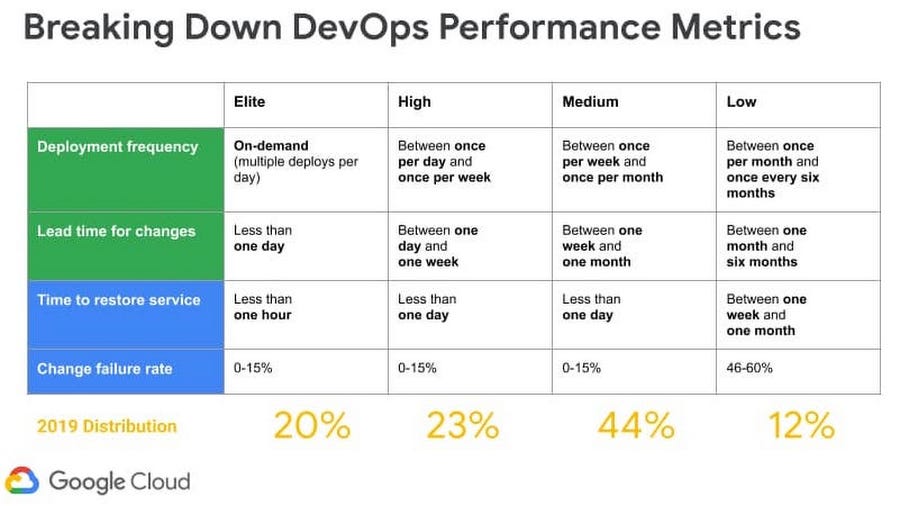

One of the core tenets of DevOps and DataOps is continually improving developer practices, and metrics can help you track whether your team is actually improving.

You do have to be careful with productivity metrics, as sometimes there can be mitigating circumstances that reduce developer productivity, like illness or especially difficult requirements.

But without hard data, it is hard to know whether any changes to developer practices and process are working.

But what sort of metrics could you use to track? Well, we can take some inspiration from DevOps metrics and adapt them to data:

Number of changes per developer that cause issues over a time period: ideally, you’ll want this to be stable at a low level; if you see it rise, you may want to prioritise reliability over new features until it comes down.

Deployment frequency per developer over a time period: the number of changes or improvements pushed to the Data Platform. There can be a number of reasons for this to drop, such as an issue with the DataOps process, the Data Platform technology or the requirements, among others, so view it with caution.

Lead time for changes: how long does it take for a requirement to go from being accepted to being deployed into production? This is very important, as the quicker you can react to changes in the business, the more valuable your Data Platform is.

Time to restore service: how quickly can you recover from failure? This matters most when the analytical data you hold is of critical importance to the business, though having backup servers and databases to recover from failure quickly is often expensive.

Number of Data Quality issues per developer over a time period: how trustworthy is your data? Again, if you see this rising, then you may want to prioritise data reliability over new features until it comes down.
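The metrics above can be computed from a simple record of changes. This is a sketch under assumptions: the `Change` record and its fields are hypothetical, and time to restore is measured from deployment to restoration, assuming a faulty change causes its issue as soon as it is deployed.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Change:
    """One change deployed to production (hypothetical record)."""
    developer: str
    accepted_at: datetime                    # requirement accepted
    deployed_at: datetime                    # change reached production
    caused_issue: bool = False               # did this change cause an incident?
    restored_at: Optional[datetime] = None   # when service was restored, if it did
    data_quality_issues: int = 0             # data quality issues traced to this change


def dataops_metrics(changes: list[Change], start: datetime, end: datetime) -> dict:
    """Summarise the changes deployed in the period [start, end)."""
    window = [c for c in changes if start <= c.deployed_at < end]

    deployments = Counter(c.developer for c in window)
    issues = Counter(c.developer for c in window if c.caused_issue)
    data_quality = Counter()
    for c in window:
        if c.data_quality_issues:
            data_quality[c.developer] += c.data_quality_issues

    lead_times = [c.deployed_at - c.accepted_at for c in window]
    # Assumption: the issue starts at deployment time.
    restore_times = [c.restored_at - c.deployed_at
                     for c in window if c.caused_issue and c.restored_at]

    return {
        "deployments_per_developer": dict(deployments),
        "issues_per_developer": dict(issues),
        "data_quality_issues_per_developer": dict(data_quality),
        "median_lead_time": median(lead_times) if lead_times else None,
        "mean_time_to_restore": (sum(restore_times, timedelta()) / len(restore_times)
                                 if restore_times else None),
    }
```

Using the median rather than the mean lead time stops one unusually slow change from dominating the figure.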

Note that tracking these measures costs money and time, so we’ve come across many Data Platforms (usually small, older platforms) that track them only partially or not at all, relying instead on the “gut feel” of Engineers and Managers to decide when to prioritise reliability over new features.

Large, complex Data Platforms need these metrics more, because no single person or team can truly grasp what is happening without measuring it. The metrics also give you hard evidence when building a business case for Data Platform improvement and investment.

How do you Automate Updates to Your Data Platform?

Start by building a pipeline to hold all your deployment tasks: Continuous Integration/Continuous Deployment (CI/CD) pipelines automatically deploy infrastructure, code, database schemas and data loading in a single pipeline.

The pipeline can be kicked off automatically by a code change (commit) or manually.

You’ll build the infrastructure for your Data Platform first, and the most automated and reliable way to build infrastructure such as compute (servers), data storage, databases and the networking between them is with Infrastructure as Code (IaC).
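To show what “as code” means in practice, the sketch below models the core idea behind declarative IaC tools (Terraform’s `plan` step works along these lines): you declare the resources you want, compare them with what currently exists, and derive the actions needed. The resource names and properties are made up, and this is not any tool’s API.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> list[tuple[str, str]]:
    """Work out which resources to create, update or delete to reach the desired state."""
    actions = []
    for name in sorted(desired.keys() - current.keys()):
        actions.append(("create", name))          # declared but missing
    for name in sorted(desired.keys() & current.keys()):
        if desired[name] != current[name]:
            actions.append(("update", name))      # exists but has drifted
    for name in sorted(current.keys() - desired.keys()):
        actions.append(("delete", name))          # exists but no longer declared
    return actions


# Hypothetical resources: the declared state lives in version control.
desired = {
    "raw_storage": {"type": "object_storage"},
    "warehouse": {"type": "database", "size": "small"},
    "etl_server": {"type": "compute", "cpus": 4},
}
current = {
    "warehouse": {"type": "database", "size": "x-small"},
    "old_server": {"type": "compute", "cpus": 2},
}
print(plan(desired, current))
# [('create', 'etl_server'), ('create', 'raw_storage'), ('update', 'warehouse'), ('delete', 'old_server')]
```

Because the plan is derived from the declared state, once it has been applied a second run finds nothing left to do, which is what makes IaC builds repeatable.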

Then you can deploy your software (Data Pipelines and calculations) on top of the infrastructure.

It is also common to run a battery of tests after the infrastructure and software are deployed to a test environment: this will ensure your Data Platform works as expected and meets its requirements.
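A battery of post-deployment data tests might start as small as the sketch below, which checks a hypothetical `orders` table (passed in as a list of row dictionaries) for a minimum row count, missing values and duplicate keys. In a real platform you would run equivalent checks as queries against the test environment, often through a testing framework, but the logic is the same.

```python
def run_data_tests(rows: list[dict], key: str, required_columns: list[str],
                   min_rows: int) -> list[str]:
    """Return a list of failure messages; an empty list means all checks passed."""
    failures = []
    if len(rows) < min_rows:                      # did the load produce enough data?
        failures.append(f"expected at least {min_rows} rows, got {len(rows)}")
    for column in required_columns:               # are mandatory fields populated?
        nulls = sum(1 for row in rows if row.get(column) is None)
        if nulls:
            failures.append(f"{column}: {nulls} null value(s)")
    keys = [row.get(key) for row in rows]         # is the business key unique?
    if len(keys) != len(set(keys)):
        failures.append(f"{key}: duplicate values")
    return failures


orders = [  # hypothetical sample of the deployed 'orders' table
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": 5.0},
]
print(run_data_tests(orders, key="order_id", required_columns=["order_id", "amount"], min_rows=1))
# ['amount: 1 null value(s)', 'order_id: duplicate values']
```

A non-empty result would fail the pipeline run, so the change never reaches the manual review step.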

Once the tests pass, there are likely to be one or more manual review steps before deploying to a staging, User Acceptance Testing (UAT) or production environment.
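The pipeline described above can be sketched as an ordered list of stages that stops at the first failure, with a manual approval gate before production. The stage names, the `Stage` type and the `approve` callback are hypothetical stand-ins for what a CI/CD tool provides (steps, jobs and manual approvals); this only illustrates the control flow.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]        # returns True on success
    needs_approval: bool = False   # manual review required before this stage


def run_pipeline(stages: list[Stage], approve: Callable[[str], bool]) -> list[str]:
    """Run stages in order, stopping at the first failure or rejected approval."""
    completed = []
    for stage in stages:
        if stage.needs_approval and not approve(stage.name):
            print(f"Stopped before '{stage.name}': not approved")
            break
        if not stage.run():
            print(f"Stage '{stage.name}' failed; later stages skipped")
            break
        completed.append(stage.name)
    return completed


# Hypothetical stages; each lambda stands in for a real deployment or test step.
stages = [
    Stage("deploy infrastructure to test (IaC)", lambda: True),
    Stage("deploy pipelines to test", lambda: True),
    Stage("run tests", lambda: True),
    Stage("deploy to production", lambda: True, needs_approval=True),
]
print(run_pipeline(stages, approve=lambda name: True))
```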

One-Way and Two-Way Decisions

You’ll likely want more checks for certain kinds of changes that are hard to reverse, such as large data migrations, which Amazon calls ‘one-way door’ decisions. Changes that can be reversed are ‘two-way door’ decisions, and these should be more automated, with fewer checks, as they can be reverted.

It goes without saying that you should always look to turn one-way decisions into two-way decisions wherever possible.
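One way to put this into practice, assuming your schema migrations are plain SQL statements, is to classify each migration and route one-way changes to manual review while two-way changes deploy automatically. The patterns and function names below are a hypothetical and deliberately crude heuristic (for example, a data-changing `UPDATE` can also be hard to reverse), so treat this as a starting point rather than a complete rule set.

```python
import re

# Hypothetical heuristic: statements that destroy data or structure are hard to reverse.
ONE_WAY_PATTERNS = [
    r"\bDROP\s+(TABLE|SCHEMA|DATABASE|COLUMN)\b",
    r"^\s*TRUNCATE\b",
    r"^\s*DELETE\s+FROM\b",
]


def is_one_way(statement: str) -> bool:
    """True if the SQL statement matches a pattern we treat as irreversible."""
    return any(re.search(p, statement, re.IGNORECASE) for p in ONE_WAY_PATTERNS)


def review_route(migration: list[str]) -> str:
    """Route a migration: any one-way statement means a human must review it."""
    if any(is_one_way(s) for s in migration):
        return "manual review"
    return "auto-deploy"


print(review_route(["ALTER TABLE orders ADD COLUMN discount NUMERIC"]))  # auto-deploy
print(review_route(["ALTER TABLE orders DROP COLUMN discount"]))         # manual review
```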

How many environments do you build? The balance between infrastructure cost and developer productivity

This is a bit of a digression, but an important one, as one of my biggest pet peeves is not having the budget for enough environments for a Data Platform, which slows down developers whose time costs 10x to 100x more per hour than the infrastructure.

If you build just one environment for your Data Platform, you have to be very careful that any change you make will not break it, especially changes that are irreversible (for example, deleting data), though you should try to keep irreversible changes to a minimum as well!

So you may build a test and/or staging environment so that changes can be tested before going live. However, this doesn’t scale on its own: you’ll likely want to develop as well as test, and you don’t want development to affect testing, so you build a development environment as well.

Now you have two or three environments, but a large team of developers may need more, as one developer could make a change that affects the others, blocking work for everyone or, worse, confusing developers when they hit an issue (was it my change or someone else’s that caused this?).

So ultimately you may have two to three environments plus one for each developer. This starts to cost a lot, not to mention the maintenance overhead. But there are lots of ways to save money here:

Automated IaC builds often take only minutes or hours: since you may only need production up 24 hours a day, 7 days a week, you can build the other environments only when you need them, dramatically reducing infrastructure costs.

Developing and testing on a subset of data to reduce processing costs (or a subset of synthetic or anonymised data for security reasons). The downside is that you could miss critical issues caused by parts of the data you did not test.

You may build only two Stream Processing platforms, Data Warehouses or Lakehouses: one for production and another shared across testing and development using different schemas. You may even build just one, though that makes infrastructure changes riskier, trading increased risk for lower costs.

Some data vendors make switching environments faster and cheaper by offering “shallow” clones of your data: Snowflake Zero-Copy Cloning and Delta Lake Shallow Clone are two examples.
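To put rough numbers on building environments only when needed, here is a back-of-the-envelope cost sketch. The hourly rate and usage hours are hypothetical, and it assumes every environment costs the same per hour, which is rarely true, but it shows why building non-production environments on demand matters.

```python
HOURS_PER_MONTH = 24 * 365 / 12  # average month, 730 hours


def monthly_cost(hourly_rate: float, always_on_envs: int,
                 on_demand_envs: int = 0, on_demand_hours: float = 0.0) -> float:
    """Monthly infrastructure cost, assuming every environment costs the same per hour."""
    always_on = always_on_envs * hourly_rate * HOURS_PER_MONTH
    on_demand = on_demand_envs * hourly_rate * on_demand_hours
    return always_on + on_demand


rate = 2.0  # hypothetical cost per environment-hour
print(monthly_cost(rate, always_on_envs=3))                                         # 4380.0
print(monthly_cost(rate, always_on_envs=1, on_demand_envs=2, on_demand_hours=220))  # 2340.0
```

With this hypothetical rate, keeping production, test and development up all month costs about $4,380, whereas building test and development only for 220 working hours a month (10 hours a day, 22 days) brings the total down to about $2,340.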

Speed vs. Reliability vs. Budget Trade-offs

Getting this balance right is difficult: attaining 100% reliability in a complex platform probably means pushing virtually no changes or improvements to it, which can quickly make a Data Platform out of date and irrelevant to the business.

However, high reliability is important for people to trust your platform, and it is critical when data is used in safety systems, where data quality is a matter of life or death.

Investing more in your DataOps processes can allow you to move fast but in a reliable manner. However, it can be expensive and may take a while to show value as your engineers adjust to any new processes or technology.

Finding the right balance isn’t easy, though it can be easier with the metrics discussed above.

Summary

This was a big post, as DataOps will be implemented differently in every organisation, and even by every engineering team within an organisation, and I wanted to cover all the major trade-offs and decisions you will need to make.

I hope this was useful and inspired you to build more efficient and reliable Data Platforms! Feel free to comment if you think I missed anything or have any extra advice on how to implement DataOps.

Sponsored by The Oakland Group, a full service data consultancy. Download our guide or contact us if you want to find out more about how we build Data Platforms!
