What is it like to build a Data Platform in GCP?

An interview with Principal Data Engineer Graham Thomas.

Last week was the Google Data and AI Summit. The product updates sounded interesting, but I did not have much experience with, or context on, Google’s public cloud service, Google Cloud Platform (GCP). So I asked my colleague and GCP expert, Graham Thomas, to help me out with the interview below.

Graham is a Principal Data Engineer at Oakland Group. He has many years of experience building data solutions in both GCP and AWS, having worked for large companies such as Meta, Betfair, and Bank of Ireland.

Jake: What are some great features of GCP that most people aren’t aware of?

Graham: There are loads! For example:

Cloud Composer: As far as I know, no other cloud provider has a managed Airflow service as advanced as GCP’s. Beyond the convenience of a managed service, every node in the Airflow cluster comes pre-loaded with the tools and Python libraries Airflow needs to integrate seamlessly with the rest of the GCP suite. This massively reduces a hassle that normally comes with a self-managed install: getting all the components to talk to each other.

[Editor’s note: Airflow is a commonly used open-source data pipeline orchestrator.]

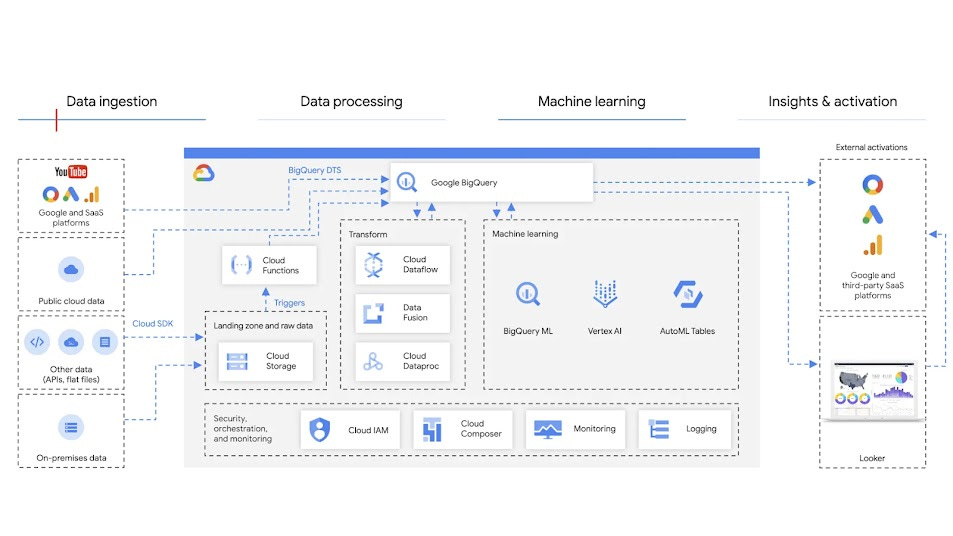

Integration with other Google services: For business products such as Google Ads, Google Search, or Google Analytics, you can easily stream or batch data straight into Google Cloud Storage (GCS) or BigQuery just by linking your accounts. This can save you the time and resources you would otherwise spend building multiple data pipelines.

Experience: A lot of GCP’s services are extensions of solutions Google built to meet its own big data needs (e.g. search, maps, ads), so they’re battle-tested against real-world challenges.

BigQuery: Most people will be aware of BigQuery, but they might see it as just an alternative to AWS Redshift and Snowflake and not appreciate how awesome it actually is. I’ve put it through its paces a fair bit, and it’s never failed to deliver. My anecdotal observation is that it’s more performant and easier to use than Redshift, but I haven’t done any scientific testing on that. In case you can’t tell, I’m a massive BigQuery fanboy 😊.

[Editor’s note: There are some cloud data warehouse comparisons online, but realistically, you need to test your own workload to know for sure.]
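A good starting point for that kind of testing is a small Python harness like the one below. It times the same queries on more than one warehouse and reports the median of several runs. This is only a sketch. The runner callables are hypothetical placeholders: in practice, each would wrap a real client and block until the query has finished. The fake runners in the usage example just sleep.

```python
import statistics
import time
from typing import Callable, Dict, List

# Hypothetical interface: a runner takes SQL text and blocks until the query completes.
QueryRunner = Callable[[str], object]


def benchmark(runners: Dict[str, QueryRunner], queries: List[str],
              repeats: int = 5) -> Dict[str, Dict[str, float]]:
    """Return {warehouse: {query: median wall-clock seconds over `repeats` runs}}."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    results: Dict[str, Dict[str, float]] = {}
    for name, run in runners.items():
        results[name] = {}
        for sql in queries:
            timings = []
            for _ in range(repeats):
                start = time.perf_counter()
                run(sql)
                timings.append(time.perf_counter() - start)
            # The median is less sensitive than the mean to one-off slow runs.
            results[name][sql] = statistics.median(timings)
    return results


# Hypothetical stand-ins for real warehouse clients; they only sleep.
fake_runners: Dict[str, QueryRunner] = {
    "warehouse_a": lambda sql: time.sleep(0.001),
    "warehouse_b": lambda sql: time.sleep(0.002),
}
queries = ["SELECT 1", "SELECT COUNT(*) FROM sales"]

for warehouse, per_query in benchmark(fake_runners, queries, repeats=3).items():
    for sql, seconds in per_query.items():
        print(f"{warehouse:12} {seconds * 1000:7.2f} ms  {sql}")
```

Watch out for result caching when you point this at real systems. BigQuery, for example, answers repeated identical queries from its cache by default, so a fair comparison would turn that off. The `google-cloud-bigquery` client exposes this setting as `use_query_cache` on `QueryJobConfig`.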

AI/ML: There are loads of AI/ML tools in GCP. Some need minimal effort to get started with (AutoML, BigQuery ML), and others are aimed at the more seasoned data scientist (Vertex AI, Dialogflow).

Jake: What pains and issues are there in GCP that most people are not aware of?

Graham: That’s not to say there aren’t any, but nothing significant stands out. The issues I’ve come across have been quite specific technical ones. For example, there was an issue using mysqldump with Cloud SQL where extracting NULLs caused stray newline characters to be inserted. Despite this being what I would call a pretty significant bug, it took them years to fix. There’s also a performance issue with running dbt jobs in Composer.

Jake: Dataflow sounds perfect: it does batch and real-time processing in one service. Does it live up to the hype?

Graham: Short answer: yes. Long answer: it’s excellent for real-time processing, but for batch I’d much prefer dbt, or Spark on Dataproc for heavier lifting. That said, Dataflow has a nice interface, and it’s easy to monitor jobs and resume them when they fall over. A common design pattern in GCP is Pub/Sub --> Dataflow --> BigQuery. Pub/Sub holds on to messages until they are acknowledged and redelivers any that aren’t, so you’ll still get your data even if your Dataflow job falls over.
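To make that guarantee concrete, here is a toy, in-memory Python model of the Pub/Sub --> Dataflow --> BigQuery pattern. `ToySubscription`, `run_pipeline`, and the dict standing in for a BigQuery table are all hypothetical. This is not the google-cloud-pubsub or Apache Beam API. The model only shows why redelivery protects you. A message leaves the subscription only when it is acknowledged, so if the job falls over after writing a row but before acknowledging it, the message comes back once its ack deadline passes. Redelivery means a message can be processed twice, so the sketch writes rows keyed on message ID. A redelivered message then overwrites its own row instead of creating a duplicate. This is one common way to cope with duplicates, not a description of Dataflow's internals.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Message:
    message_id: int
    data: dict


class ToySubscription:
    """Hypothetical stand-in for a Pub/Sub subscription (at-least-once delivery)."""

    def __init__(self) -> None:
        self._pending: deque[Message] = deque()
        self._in_flight: dict[int, Message] = {}
        self._next_id = 0

    def publish(self, data: dict) -> None:
        self._pending.append(Message(self._next_id, data))
        self._next_id += 1

    def pull(self) -> Message | None:
        # Lease the next message; it is not deleted until it is acknowledged.
        if not self._pending:
            return None
        msg = self._pending.popleft()
        self._in_flight[msg.message_id] = msg
        return msg

    def ack(self, msg: Message) -> None:
        # Acknowledging is the only thing that removes a message for good.
        self._in_flight.pop(msg.message_id, None)

    def expire_leases(self) -> None:
        # Simulate the ack deadline passing: unacknowledged messages are requeued.
        self._pending.extend(self._in_flight.values())
        self._in_flight.clear()


def run_pipeline(sub: ToySubscription, table: dict[int, dict],
                 crash_after: int | None = None) -> int:
    """Pull, transform, write, then ack; return how many messages were handled.

    If crash_after is set, the job "falls over" right after writing that many
    rows, before acknowledging the last one.
    """
    handled = 0
    while (msg := sub.pull()) is not None:
        row = {"user": msg.data["user"], "amount_pence": round(msg.data["amount"] * 100)}
        # Idempotent write keyed on message ID, so a redelivery cannot duplicate a row.
        table[msg.message_id] = row
        handled += 1
        if crash_after is not None and handled == crash_after:
            return handled  # simulated crash: row written, message never acked
        sub.ack(msg)
    return handled


sub = ToySubscription()
for user, amount in [("a", 1.5), ("b", 2.25), ("c", 3.0)]:
    sub.publish({"user": user, "amount": amount})

table: dict[int, dict] = {}
run_pipeline(sub, table, crash_after=2)  # job falls over on the second message
sub.expire_leases()                      # the unacked message is redelivered
run_pipeline(sub, table)                 # restarted job picks up where it left off
print(len(table))  # 3: each message appears once in the table
```

The key design choice is that acknowledgement happens only after the write succeeds. A crash between the two steps therefore costs you a repeated write rather than a lost message, and the idempotent write makes that repeat harmless.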

Jake: Do you think GCP works well for a data mesh and data products?

Graham: I haven’t had first-hand experience of this, but Dataplex, a data cataloging service aimed at data meshes, looks very inviting. For example, it can share data by managing access to assets such as BigQuery datasets from within Dataplex.

Also, Starburst, a commonly used federated query engine that can support a data mesh architecture, recently announced integrations with Dataplex and BigQuery. This is really exciting news and will only make the platform stronger.

There is also Analytics Hub, which lets you share and sell BigQuery data, both internally and publicly, from one shared library.

Jake: Why do you think GCP has a smaller market share than AWS and Azure?

Graham: I’m not sure, but I’ve always assumed it’s because AWS was first to market, and Azure has the connection with Windows and Office, which are familiar and comforting to a lot of businesses. Also, while I think GCP is a fantastic platform for data and analytics, I don’t know if it’s the best in other areas. So if a business already uses AWS or Azure for its operational workloads, it’s probably unlikely to use GCP for analytics.

Jake: Does the smaller market share cause issues with using GCP?

Graham: Not that I’ve seen. They seem to release new features and improvements at a decent pace, so I wouldn’t say there’s any disadvantage to being on GCP from that perspective.

Sponsored by The Oakland Group, a full-service data consultancy. We’re hiring!