Issue #25: Interview with Justin Borgman, CEO of Data Lake Analytics Platform, Starburst!

Plus: Terraform License Change, Data Quality Resolution Process Guide, Securing Data in Azure and Building Your Own Fivetran

Hi all, I interviewed Justin on several data topics, including startups vs. enterprises, LLMs, Data Mesh vs. Data Fabric (or a combination of both of them!), and balancing internal vs. external responsibilities as a data leader. Video and transcript below!

Also, we have the usual selection of great articles to share:

Hashicorp Makes Terraform Licence Less Open: What is the Impact?

Massive 70-Page Guide to the data quality resolution process

How to Create a Secure Azure Data Platform

After the Modern Data Stack: Welcome back, Data Platforms

The Art of Building Your Own ELT

The Complexities of Entity Resolution Implementation

Mind the Gap: Seamless data and ML pipelines with Airflow and Metaflow

Justin Borgman Interview

First, the video, but for those who prefer to read, I’ve edited the video transcript below:

Jake: Hi Justin, would you like to briefly introduce yourself?

Justin: I'm Justin Borgman, co-founder and CEO of Starburst. Starburst is the company behind an open-source project called Trino. Essentially, we provide a Data Lake analytics platform that allows you to query data that lives both in the lake and outside of the lake, so we can really query data that lives anywhere. And that's part of what sets us apart from other platforms in the industry.

Jake: You were once the founder of Hadoop startup Hadapt. So considering you were very involved in the Hadoop ecosystem, what do you think are the key lessons from the Hadoop era that can be applied today? Because I feel like it's file-based structure is something that has come back around again with Data lakes.

Justin: Yeah, I think you're absolutely right. I think that is the lasting legacy of Hadoop. I mean, really, the notion of a data lake was created during that period. I mean, the first data lakes were absolutely Hadoop data lakes, and that's where that term came to be.

I think the concept of a data lake is going to potentially live on forever because there are so many natural benefits, like the notion of leveraging open data formats, something that was pioneered by Hadoop with the creation of Parquet files and Avro files, and all these different ways of storing data in a columnar fashion to still get good performance. But laid out in this really inexpensive commodity storage system.

I think that's another key: the legacy of Hadoop is this notion of let's store as much data as we possibly can in the cheapest possible place, where we can store it, and that's either going to be, classically, the Hadoop file system or, more often, object storage, S3 on Amazon, Azure Data Lake Storage, or Google Cloud Storage. All of them represent inexpensive storage.

Lastly, I would say this notion of open architectures was really pioneered back then, at least as it pertains to data warehousing analytics, with the notion that not only are your data formats open and your file storage, you know, inexpensive and open, but also that the means for how you process that data should perhaps also be open.

And so you have the rise of technologies like Presto and Trino, which we’re the creators of, or Spark, you know, as another example, and the idea that these engines can all query the same open data formats, I think, is a really important aspect of the architecture that, again, lives on today.

Now, while Hadoop itself may be waning in popularity, you know, again, a lot of these concepts live on and have simply moved to the cloud and cloud object storage.

Jake: Then you moved into Teradata, which looks from the outside in like a very different kind of company than a startup.

Did working for Teradata alter your thinking about how to approach future startups when you came back to founding Starburst?

Justin: Yeah, I think I’ve got two great observations from my time at Teradata: first and foremost, that enterprise customers, where Teradata really is very strong, have particular needs and requirements that internet companies don't have; they need more robust access controls, they need better governance, they need metadata management and catalogue support, and, just Kerberos integration, LDAP integration, so many different sorts of features and capabilities that frankly, somebody like Facebook just doesn't really care about. But mainstream enterprise customers very much do. And so that left an impression on sort of what it means to be “enterprise grade”.

The other observation was maybe a little bit more specific to Teradata, which is that they were really the pioneers of this idea of an enterprise data warehouse, which was always about centralising all of your data into one central data warehouse. And yet, when I got there, I realised that not one of their customers actually did that. Not one of them actually had everything in the enterprise data warehouse; they all have these different data silos, Data Mart's different applications, device data, and multiple clouds on-premise.

There is so much heterogeneity inherent to the operation of their business that this enterprise data warehouse model seemed like it was actually impossible. And that was pretty interesting to see from the Teradata vantage point because, of course, at least at the time when my company was acquired, they had $2.7 billion in revenue and were the industry leader. So this was like the best-in-class, you know, version of the enterprise data warehouse.

And yet, even their customers didn't truly centralise everything. So that was really what started to get me thinking: we need to design an architecture that actually accommodates data living outside of this central data warehouse.

Jake: Yeah, I probably agree from my experience of seeing large companies try their best to centralise everything, but finding out that getting people to even use one cloud platform can be just too much work, let alone trying to get everyone to use one technology,

I would say there are two major opinions of Data Architectures out there right now: Data Fabric and Data Mesh. I'm wondering what your opinions are on them.

Justin: So I think, you know, first of all, I'll start with the commonality, which is that I think both of them recognise that you need to be able to account for decentralised data. And I think the differences lie in sort of how you actually put that into practise, how you govern that, and how you manage that.

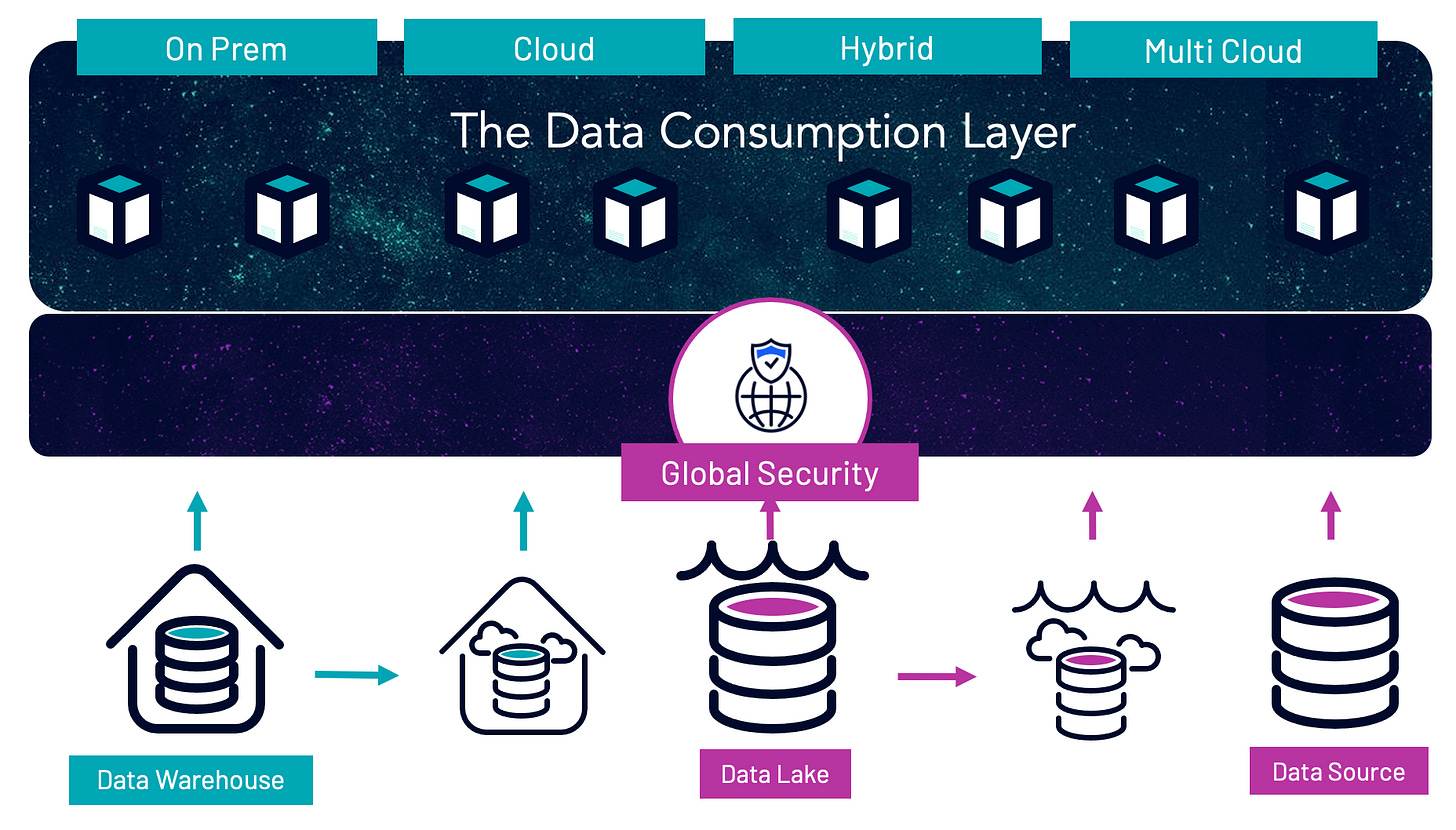

And at least the way that we think about it with our customers is that there are certain elements that you certainly want to centralise, and those are the things around security and access controls, management, and administration of the data platform. Those aspects likely need to be centralised.

And then I think it really depends on your organisational maturity in a way: your data maturity and data literacy. Perhaps in terms of who produces data products and who's responsible for data quality, and that perhaps influences just how much further you decentralise those processes or not. And that's more of a people-process challenge than a technology one.

And that's sort of the advice that I give customers, like, you need a technology that can handle your decentralised data, whether you choose to centralise the management, the administration, the governance, and even the data quality or not. It comes down to sort of the maturity of your decentralised teams, in the greatest embrace of a Data Mesh philosophy, you would have those domain owners being the ones who curate and create those data products, and they are responsible for that data quality. And I think there are a lot of benefits to that approach.

But I think you have to ask yourself, are we ready for that today? Do we have the people in place to facilitate that level of decentralisation? Or does it make more sense to sort of still have a centralised team that can simply reach out and access the decentralised data?

Jake: I have also seen some people talk about sort of merging them both together. So you get like a best of both worlds approach, almost like a Goldilocks solution, where you use the knowledge graph of the Data Dabric with decentralisation: I don't know if you've ever seen it or have thoughts on that?

Justin: Yeah, that's interesting. I certainly haven't heard it expressed that way before. Yeah, no, it's an interesting idea. I mean, I think it makes sense conceptually.

Jake: So moving on to data products, I'd say Starburst has been leading the charge in terms of Data Products, and is now becoming the de-facto way we look at breaking up our data in an organisation. So now that we're starting to see an adoption of Data Products in a lot of companies, have you seen any unexpected benefits or issues with Data Products, now that we're starting to see them come into production?

Justin: I think one unexpected, or maybe it shouldn't be expected, benefit that we see, or maybe it's more of a benefit than people expect, I guess, is that people are talking more and there's greater collaboration between consumer and producer than I think historically there has been because, you know, in the more traditional model, the data producer is sort of an anonymous, faceless, you know, human that we don't know as a data consumer. Right? And so if there are areas to be improved in that data product, whether it's adding additional fields or, or what have you, you know, I don't really even know how to begin to send that feedback back to the to the user, or even to ask questions of the data. Sometimes it's simply trying to understand the data that exists better.

But what data products were really facilitating that, that consumer producer interchange, if you will, an interaction and I think that's where people really start to see some of the unexpected benefits to your question of a data products model, data product centric model.

Jake: Yeah, I seen many struggle with the relationship between data producer and consumer, but there has been a lot of talk to improve that with data contracts. I’ve also been thinking, will we start seeing a blurring of the boundary between operational and analytical planes where they work closer together? Or will it stay as two distinct planes, as it might be better to keep them separate?

Justin: Yeah, no, that's a good question. I think that hopefully, we will see more collaboration between those two planes in terms of, you know, I guess the the interaction around like, what the schema should, should contain, right? Like the operational side very often is not necessarily aware of what the analytical side finds valuable in terms of that data capture. And so hopefully, there is greater collaboration between the two.

I don't think the actual underlying database systems will necessarily converge. I mean, every now and then, people talk about that. Well, we have an OLAP and OLTP database that does both. I think that's a little bit more of a challenge around the physics of just being read optimised versus write optimised.

But I think, hopefully greater collaboration between the humans involved on those two sides, because I do think there's a lot of value.

Jake: Moving on to Data Catalogs. With Starburst Gravity recently being released, what I've also started to notice is Data Catalog are being introduced into Data Processing products like Databricks and Unity Catalog, or even into Data Orchestration with Dagster. Do you think this is going to be an increasing trend where we see products lean more into data governance?

Justin: Yes, I think in conjunction with some of the points you brought up earlier around data being decentralised, the role of the catalogue becomes more important and more essential, almost like table stakes for the whole thing to work. Because you now need to account for data that lives outside of one single database. And so yes, I think this is a theme and is becoming increasingly a required capability for customers.

Jake: To be a bit sneaky, are you going to start to expand out Gravity as you go on over the years, or is that something behind a NDA?

Justin: Yeah, no, we do intend to expand it. I think our mission is to make distributed architectures as easy and seamless as possible for our customers. And that means that anything that we can do to make that a more streamlined experience is within scope from our standpoint, and that's everything from metadata management to access controls, which, of course, we now provide in Galaxy, our SaaS product, we provide attribute-based access control, role-based access control, and very fine-grained access control.

We think these are sort of table stakes. And being able to centrally define those and enforce them across all the different data sources you connect to has a lot of value for customers. So yes, I think all of those things are within scope.

Jake: There have been a few people in data saying that big data is dead. I don't know if you've seen the famous MotherDuck article claiming that? As someone who's from the original days of big data, you might know some people who say big data never actually happened. So good to have your take on whether it happen? Is it still happening?

Justin: Yeah, sure. I mean, I disagree with the idea that big data is dead. I mean, I think that it makes sense for MotherDuck to say that, and, look, I think DuckDB is a great database system. There will always be point solutions and specific use-case solutions for specific problems.

You know, one of my greatest influences from a database perspective is Mike Stonebreaker, who's a professor at MIT here in Boston, where I'm based. He is the creator of Ingress, Postgres, Vertica, and so many other different database systems; you won the Turing Award; he's probably one of the leading computer scientists of our time, certainly as it pertains to databases; and one of his most famous statements was that there's no one-size-fits-all database and that you're always going to have purpose built database systems. And I think that's absolutely true. And I think Duck DB is just an example of that.

But the idea that big data is dead, that we don't need to analyse all of our data, or something to that effect, I think is certainly not true. And I think with the increasing attention towards LLM and Generative AI, if anything, I would say big data is more alive than ever before, because your models are only as good as the data that you train them on. And very often, at least as it pertains to LLMs, more data is usually better to have an even more accurate model. So, I think understanding your business holistically is really important. So, you know, while we don't think you'll centralise all your data in one data warehouse, we think that having access to as much data as possible is really, really important.

Jake: Thanks, and that brings us nicely to my next question: I'm wondering what’s going to be Starburst's future with Large Language Models (LLMs)? Say, can you start integrating them into your products or finding other ways of communicating with them?

Justin: Yeah, I think so in a couple of ways. First of all, within the product itself, we've already actively prototyped. And something that we'll be introducing soon is the notion of natural language to SQL translation, and I think that's low hanging fruit for the industry. I don't think that we'll be alone in offering that; I think many, many, many vendors will likely offer that it's actually fairly straightforward to just be able to take natural language and turn those into SQL queries.

I think the harder stuff that is maybe even slightly more interesting because it's hard, is the automatic curation and creation of data products, back to your earlier question (on Data Catalog), you know, can we help facilitate and present data products that consumers are going to find valuable in their analysis. That's a little bit longer lead time, but it's also an area that we think is really interesting.

And then I'll say, broadly, that by virtue of us being able to provide access to all of the data in your enterprise, we want to be the access layer to help you, as the customer, build and train your own LLMs that are really specific to your business and derive a proprietary competitive advantage for you. Right? I think Chat GPT is a great technology to experiment with and have some fun with. But I think where the real value is going to come from enterprise customers is when they're training themselves using their own proprietary enterprise data.

And that won't be Chat GPT, most likely, right? You certainly don't want GPT training on your data. So I think those are the types of things that are very interesting from our perspective.

Jake: Yeah. I'm constantly interested to see how it's going to turn out, for example, where we are going to end up with like open source models, like we had with the previous ML cycles, or everyone's going to end up using the Open AI, which has some concerns with one company dominating.

Justin: And I will say, and obviously, we're big believers in open source in general, that I do think the open source LLM world is just getting going. And I think that'll be a really big force. I think, to your point, people aren't going to want to trust a vendor with their data. And I think these open-source models are getting really good.

Jake: Yeah, agree. Moving on to data leadership, how do you balance running a large business while still keeping an understanding of your customers needs and delivering the technology they need to run their businesses?

Justin: I think the key is, you have to find the right balance between time spent internally and externally. And what I mean by that is that spending time with customers, to me, is almost like oxygen; I need it, and it helps inform our product roadmap.

And, you know, it goes back to that old saying: if you ask somebody 120 years ago, what they wanted in terms of personal transportation, they would say I wanted a faster horse. You know, you have to really spend time with customers to understand they actually don't want a faster horse; they just want to get from point A to point B faster. And that maybe a car would actually be the better way to do that.

And so, you know, if you want to make revolutionary change, you know, disruptive change, and not just incremental change, you really have to spend a lot of time with your customers, empathising with and understanding their challenges at a very root level, because otherwise, you just listen to what they say.

And an average product manager can do that, you know, okay, faster horse, we'll go see if we can work on the horse’s diet or something and get 5% more speed out of a horse, but the person that really lives with that customer and spends a lot of time with them realises, you know, the real underlying requirements and can come up with creative solutions that might actually be more transformative to their lives.

So, to me, that's huge. And something that I don't think any leader should ever lose sight of. But at the same time, you also need to spend time internally; otherwise, you know, things don't get done. So it's about finding the right balance.

Jake: To me, there’s like a three-way thing where there are your internal customers, your current (external) customers, and the wider ecosystem where your prospective customers are, as well as keeping up with the latest trends coming around the corner.

Justin: I totally agree. It's a dynamic ecosystem that's always changing and evolving. And you need to be consciously aware that nothing in our space is ever static.

Jake: And finally, to finish: the future. So we’re already halfway through 2023, so I can't tell you what trends are going to emerge in 2023! So what trends do you think will emerge in the mainstream in 2024?

Justin: Well, I have to say, You're the first one to ask me for 2024 predictions. So I guess this will be my first 2024 prediction here in the summer. But I will say, you know, I think that, you know, going back to some of your questions around Generative AI, I think vector databases will be an interesting thing to watch.

I think open file formats, or, I'll say, modern open file formats or next generation open file formats like Iceberg, will gain a lot of momentum. We're seeing that already. I think that will continue.

I think Iceberg, you know, will win the table format war, maybe that's the most provocative thing I'll say today, and it will just become the dominant table format.

And then, you know, broadly, I'll just say I think increased focus on collaboration and data products, like, how do we create that? That collaboration, and that two-way dialogue between, you know, the data domain owner and the data consumer. So I think data products will be big next year as well.

HashiCorp's new license is still open source-ish

Most modern Data Platforms use Infrastructure as Code (IaC), which allows you to define your infrastructure as code in development and then run the same code in production, so you cut out errors from making manual changes in production.

Hashicorp’s Terraform is arguably the most common IaC solution, but they have changed their licence from allowing anyone to run their software for free in production to now only allowing companies who do not compete with Hashicorp’s products.

Now, 99% of companies will still be able to use open-source Terraform with this licence change, but it will impact one of the biggest strengths of Terraform: it’s community of tooling around it, which allows you to extend Terraform in a number of useful ways (integrations, security scanning, etc.). With this licence change, some of those tools may no longer be able to use open source Terraform, potentially killing off some of the community overnight.

In response, some of the Terraform community has started OpenTF, which is threatening an open source fork of Terraform that will split Terraform usage in the same way ElasticSearch and OpenSearch did, which is not great news long term.

I can also see lawyers for large enterprises vetoing Terraform, as it can be difficult to work out what exactly is “competing“ with Hashicorp products.

On the plus side, maybe I’ll get to use Pulumi more, which is fully open source and I suspect works better at scale than Terraform modules, with it being able to be written in a existing programming language.

Guide to Data Quality Resolution Process

Considering Data Engineers can spend on average, up to 50% of their time fixing Data Quality issues, Mark’s in-depth 70-page guide on how to best respond to these issues could be the most impactful guide you read! (Aside from mine 😉).

Please note that this is not a guide to preventing Data Quality issues; that’s a separate book in itself.

How to Create a Secure Azure Data Platform (Sponsored Article)

My employer, Oakland, has a lot of experience building secure Data Platforms in critical sectors like Telecoms and UK Government organisations, where only Data Platforms with the highest security get approved for production.

Oakland Senior Data Engineer Abigail Brown shares some of this experience on how to build a secure Azure Data Platform so you too can build a solution that has “Defence in Depth”.

I’ll also have a similar article that is platform neutral for my guide coming out soon!

After the Modern Data Stack: Welcome back, Data Platforms

There are quite a few “Modern Data Stack (MDS) is Dead!“ articles out there, but they're usually written by vendors trying to kill off MDS to increase their profits and therefore usually miss why MDS exists in the first place.

This is written by Timo Dechau, Founder of Deepskydata, a training platform for marketing data collection, who offers a refreshingly more vendor-neutral take by explaining why the Modern Data Stack took off and is very popular: but also why you wanted to adopt a more closed Data Platform.

I will say these “Post-Modern Data Stacks” likely suit small to medium teams who have a decent budget (you are putting an expensive layer on an already expensive data stack), but I can see the point of them if you fit this audience.

The Art of Building Your Own ELT

Ever wanted to build your own ELT / Integration / data movement software like Fivetran? Hugo Lu, Founder of Orchestra, show you how to build the basics of one.

The Complexities of Entity Resolution Implementation

Entity Resolution or Master Data Management (MDM) software is expensive, so how difficult would it be to build your own solution to match and deduplicate data?

Stefan Berkner, CTO of Entity Resolution software Tilores, points out that it can get very difficult indeed.

Mind the Gap: Seamless data and ML pipelines with Airflow and Metaflow

I’ve heard some complain that Airflow is not the best place to run for Machine Learning (ML) or Artificial Intelligence (AI) pipelines, as there are dedicated tools like Metaflow designed around that, giving you better integration with ML and AI software and models.

But Metaflow is not designed for Data Engineering pipelines, so you may end up doubling the maintenance of both by running them side by side.

But Michael Gregory and Valay Dave, Engineers at Astronomer and Outerbounds respectively, offer a better way(?) by running Metaflow inside of Airflow.

Sponsored by The Oakland Group, a full service data consultancy. Download our guide or contact us if you want to find out more about how we build Data Platforms!